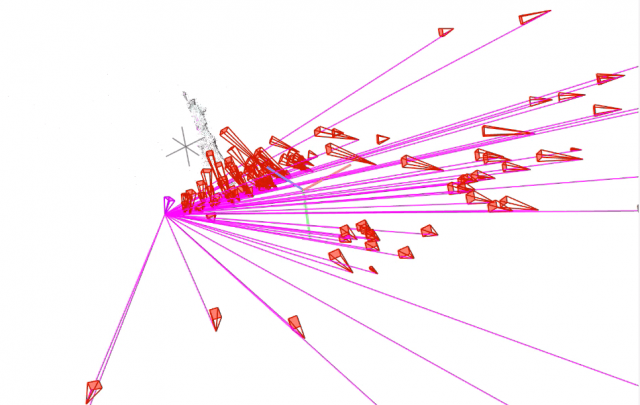

A team of researchers from the Virginia Polytechnic Institute and State University (Virginia Tech) has developed Source Form, a 3D printer that creates objects from crowdsourced image data. Shown at ACM SIGGRAPH 2019 in Los Angeles, California, Source Form generates its 3D model input by using photogrammetry to stitch together images retrieved from online databases. Because more images are uploaded to these databases over time, the machine's output becomes more accurate as time passes.

The Source Form project has received support from Virginia Tech's Institute for Creativity, Arts, and Technology (ICAT) and the National Science Foundation (NSF). In a study describing its construction, the authors state, “This project demonstrates that an increase in readily available image data closes the gap between physical and digital perceptions of form through time.”

“For example, when Source Form is asked to print the Statue of Liberty today and then print again 6 months from now, the later result will be more accurate and detailed than the previous version.”

3D printing objects without an .stl

To 3D print with Source Form, a user enters the name of the desired object. The device then retrieves text-based image search results for that name, and the user gives feedback on which of the retrieved images accurately show the object. Source Form then runs a reverse image search to find further images of the same object on the internet.
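
The two-stage image collection can be sketched in Python as follows. This is a minimal illustration, not Source Form's code: `gather_images`, `text_search`, `user_confirms` and `reverse_search` are hypothetical names standing in for an image search engine, the user's feedback and a reverse image search service, and the `max_images` cap is our own assumption.

```python
from typing import Callable, Iterable, List, Set


def gather_images(
    query: str,
    text_search: Callable[[str], Iterable[str]],     # hypothetical text-based image search
    user_confirms: Callable[[str], bool],            # hypothetical user feedback step
    reverse_search: Callable[[str], Iterable[str]],  # hypothetical reverse image search
    max_images: int = 500,                           # assumed cap, not from the article
) -> List[str]:
    """Collect image URLs for `query` in two stages."""
    # Stage 1: text search; ask the user about each unique result once
    # and keep only the images they confirm show the object.
    seeds: List[str] = []
    checked: Set[str] = set()
    for url in text_search(query):
        if url in checked:
            continue
        checked.add(url)
        if user_confirms(url):
            seeds.append(url)

    # Stage 2: expand every confirmed image with a reverse image search,
    # skipping URLs that have already been collected.
    collected = list(seeds)
    seen = set(collected)
    for seed in seeds:
        for url in reverse_search(seed):
            if url not in seen:
                seen.add(url)
                collected.append(url)
    return collected[:max_images]
```

Passing the search services in as functions keeps the sketch testable with stubs and independent of any particular search API.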

The machine uses robust feature extraction and matching algorithms to sort through the images and identify the target object. After matching corresponding features across different images, the system estimates the object's 3D structure with structure-from-motion and multi-view stereo techniques, using the Structure-from-Motion (SfM) software COLMAP. It then reconstructs a 3D mesh model from the resulting point cloud.
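
The article names COLMAP but not the exact commands Source Form runs. As a hedged sketch, the Python below assembles COLMAP's documented command-line sequence for a sparse (SfM) reconstruction followed by a dense (multi-view stereo) one, ending in a Poisson surface mesh. The workspace layout, the choice of exhaustive matching and the Poisson mesher are assumptions, and the helper names `colmap_commands` and `run_pipeline` are hypothetical. By default it only prints the commands; actually running them requires a COLMAP installation, and the `patch_match_stereo` step needs a CUDA-capable GPU.

```python
import shlex
import subprocess
from pathlib import Path
from typing import List


def colmap_commands(workspace: Path) -> List[List[str]]:
    """Build the COLMAP CLI calls for images stored in <workspace>/images."""
    images = workspace / "images"
    db = workspace / "database.db"
    sparse = workspace / "sparse"
    dense = workspace / "dense"
    return [
        # Detect and describe local features in every image.
        ["colmap", "feature_extractor", "--database_path", str(db), "--image_path", str(images)],
        # Match features between all image pairs.
        ["colmap", "exhaustive_matcher", "--database_path", str(db)],
        # Structure-from-Motion: camera poses plus a sparse point cloud.
        ["colmap", "mapper", "--database_path", str(db), "--image_path", str(images),
         "--output_path", str(sparse)],
        # Prepare undistorted images for dense reconstruction.
        ["colmap", "image_undistorter", "--image_path", str(images),
         "--input_path", str(sparse / "0"), "--output_path", str(dense), "--output_type", "COLMAP"],
        # Multi-view stereo: per-image depth maps (requires CUDA).
        ["colmap", "patch_match_stereo", "--workspace_path", str(dense),
         "--workspace_format", "COLMAP", "--PatchMatchStereo.geom_consistency", "true"],
        # Fuse depth maps into a dense point cloud.
        ["colmap", "stereo_fusion", "--workspace_path", str(dense), "--workspace_format", "COLMAP",
         "--input_type", "geometric", "--output_path", str(dense / "fused.ply")],
        # Reconstruct a surface mesh from the fused point cloud.
        ["colmap", "poisson_mesher", "--input_path", str(dense / "fused.ply"),
         "--output_path", str(dense / "meshed-poisson.ply")],
    ]


def run_pipeline(workspace: Path, dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    if not dry_run:
        (workspace / "sparse").mkdir(parents=True, exist_ok=True)
        (workspace / "dense").mkdir(parents=True, exist_ok=True)
    for cmd in colmap_commands(workspace):
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)
```

Exhaustive matching compares every image pair, which is practical for a few hundred photos; for larger crawled collections COLMAP also offers other matchers, such as `vocab_tree_matcher`.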

Before the object can be 3D printed, the mesh model must be processed, refined and repaired. This includes thickening the surface so that it forms printable features, and eliminating features that are too small to print. The system then slices the resulting voxel-based geometry into one bitmap image per voxel layer, and these images can be sent to a 3D printer.
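
As an illustration only, the following Python sketch assumes the geometry has already been converted into a set of integer voxel coordinates. It approximates thickening with a one-voxel binary dilation, removes connected parts smaller than a threshold, and slices the result into one bitmap per layer, encoded as plain-text PBM images. The function names, the 6-connected neighborhood and the PBM format are our assumptions; the article does not say how Source Form implements these steps, and doing the repair in voxel space rather than on the mesh is a simplification.

```python
from collections import deque
from typing import List, Set, Tuple

Voxel = Tuple[int, int, int]

# Face-adjacent (6-connected) neighbor offsets.
NEIGHBORS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def thicken(voxels: Set[Voxel], iterations: int = 1) -> Set[Voxel]:
    """Grow the solid by one voxel per iteration (binary dilation)."""
    result = set(voxels)
    for _ in range(iterations):
        grown = set(result)
        for x, y, z in result:
            for dx, dy, dz in NEIGHBORS:
                grown.add((x + dx, y + dy, z + dz))
        result = grown
    return result


def drop_small_parts(voxels: Set[Voxel], min_voxels: int) -> Set[Voxel]:
    """Remove connected components containing fewer than min_voxels voxels."""
    remaining = set(voxels)
    kept: Set[Voxel] = set()
    while remaining:
        seed = remaining.pop()
        component = {seed}
        queue = deque([seed])
        # Breadth-first flood fill over face-adjacent voxels.
        while queue:
            x, y, z = queue.popleft()
            for dx, dy, dz in NEIGHBORS:
                n = (x + dx, y + dy, z + dz)
                if n in remaining:
                    remaining.remove(n)
                    component.add(n)
                    queue.append(n)
        if len(component) >= min_voxels:
            kept |= component
    return kept


def slice_to_bitmaps(voxels: Set[Voxel]) -> List[List[List[int]]]:
    """One 2D bitmap (rows of 0/1) per z layer, bottom layer first.

    Row 0 corresponds to the minimum y of the bounding box.
    """
    if not voxels:
        return []
    xs, ys, zs = zip(*voxels)
    x0, y0, z0 = min(xs), min(ys), min(zs)
    width, height = max(xs) - x0 + 1, max(ys) - y0 + 1
    return [
        [[1 if (x0 + i, y0 + j, z) in voxels else 0 for i in range(width)]
         for j in range(height)]
        for z in range(z0, max(zs) + 1)
    ]


def to_pbm(bitmap: List[List[int]]) -> str:
    """Encode a non-empty bitmap as plain PBM (P1); in PBM, 1 means black."""
    height, width = len(bitmap), len(bitmap[0])
    rows = [" ".join(str(v) for v in row) for row in bitmap]
    return f"P1\n{width} {height}\n" + "\n".join(rows) + "\n"
```

Note that dilation thickens the whole shape, not only its thin regions, and that a printer's projector may expect the opposite polarity (exposed pixels white), in which case each bitmap would need to be inverted.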

Source Form currently works with SLA (stereolithography) 3D printing. When choosing which 3D printing process to use for the generated models, the research team ruled out material jetting and powder bed fusion because of their high cost. Extrusion-based 3D printers were also rejected as too slow, with low resolution and poor surface finish.

A significant element of the Source Form project is how its prints change over time. As people continue to upload images to social media, blogs and photo sharing sites, the system becomes more accurate at compiling the data and creating the desired object. “Because Source Form gathers a new dataset with each print,” the authors explain, “the resulting forms will always be evolving.” The team catalogs and displays a collection of prints of the same object made at different times, showing its growth and change in physical space.

Rethinking digital creation

Photogrammetry, along with the point cloud data that some 3D scanners also produce, is enabling new ways to think about 3D printing and digital reconstruction.

In 2018, Neri Oxman and colleagues Dominik Kolb, James Weaver and Christoph Bader at the Massachusetts Institute of Technology’s (MIT) Mediated Matter group filed a patent for a method capable of converting point cloud data into a voxel-by-voxel 3D printing file.
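
The patented method itself is not described here. As a much simpler illustration of the basic step from points to voxels, the Python sketch below bins each point of a point cloud into a cubic grid cell; the function name `voxelize` and the voxel size are our own assumptions, and the sketch ignores everything a real print file would need beyond occupancy (materials, colors, surface normals).

```python
import math
from typing import Iterable, Set, Tuple


def voxelize(points: Iterable[Tuple[float, float, float]], voxel_size: float) -> Set[Tuple[int, int, int]]:
    """Map each (x, y, z) point to the integer index of the voxel containing it."""
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    # floor() keeps negative coordinates in the correct cell; the set
    # merges points that fall into the same voxel.
    return {
        (math.floor(x / voxel_size), math.floor(y / voxel_size), math.floor(z / voxel_size))
        for x, y, z in points
    }
```

Each occupied voxel index could then be written out layer by layer, which is the sense in which such a file is built "voxel by voxel".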

The #NEWPALMYRA initiative continues the work of Palestinian Syrian open-source software developer Bassel Khartabil to digitally recreate the city of Palmyra from collected photographs, following its destruction by ISIL in 2015. The first 3D model ever uploaded to Wikimedia Commons, once the site began allowing contributors to upload them, was the Asad Al-Lat statue, a sculpture resurrected from the ruins of Palmyra. It was selected in memory of Khartabil, who was executed for his activism by the Syrian government in 2015.

“Source Form: an automated crowdsourced object generator” is published in the ACM Digital Library. It is co-authored by Sam Blanchard, Jia-Bin Huang, Christopher B. Williams, Viswanath Meenakshisundaram, Joseph Kubalak, and Sanket Lokegaonkar.

Featured image shows Source Form: an automated crowdsourced object generator.