Work by MIT researchers may make drones, robots and self-driving cars both more affordable and better performing. Successful commercialization of a new lidar-on-a-chip could bring the cost of high-quality 3D scanning within reach of Makers.

Most of us are fortunate enough to take for granted our ability to absorb information about the world around us automatically and to navigate whatever landscape we find ourselves in. Whether the environment is a crowded city street or an uneven coastal path, the combination of our senses and brain ensures we (mostly) avoid obstacles and stay upright.

Recreating this ability in machines is a challenge, and advances in silicon photonics may help address it.

Sending data using light

In an article for IEEE Spectrum’s Tech Talk blog, MIT researchers Christopher V. Poulton and Prof. Mike R. Watts describe silicon photonics as:

“A chip technology that uses silicon waveguides a few hundred nanometers in cross section to create ‘wires for light,’ with properties similar to optical fibers except on a much smaller scale.”

These waveguides can be built into a chip and perform a similar function to the wires and connectors seen in traditional electrical engineering. The difference is that light, rather than electricity, transmits data, switches circuits and performs the other tasks commonly carried out by a chip.

The advantage of using light is that it requires less power than sending data as electricity over copper connections. Photonic applications are still emerging, but widespread use is increasingly likely now that traditional microchip manufacturers are adapting mass-production facilities for the technology.

These manufacturers include companies such as Intel and Luxtera, which have spent almost 15 years developing silicon photonics. Luxtera was the first to bring a mass-produced product to market, and the company refers to the technology as CMOS photonics.

Poulton and Watts work in MIT’s Photonic Microsystems Group, which “develops microphotonic elements, circuits, and systems for a variety of applications, including communications, sensing, and coupled microwave-photonic circuits.” Earlier this month the group announced success in a DARPA-backed project to use silicon photonics to enable lidar-on-a-chip.

Lidar vs. radar and Google vs. Tesla

Radar uses radio waves to map its surroundings. Solid objects in the path of a radio wave may reflect or disrupt it, and detecting these echoes lets the system build up a picture of the environment.

Lidar uses a similar concept, the reflection of a transmitted signal, but instead of a radio wave a lidar unit sends out a light wave. The system’s full name is “Light Detection and Ranging.” The light, usually from a laser, can be infrared, visible or ultraviolet.
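In the simplest, pulsed form of either system, distance comes from how long the echo takes to return. The sketch below assumes that pulsed time-of-flight scheme (the article does not say which ranging method the MIT chip uses): the signal travels to the object and back, so the distance is half the round-trip time multiplied by the speed of light. The function names are hypothetical, chosen for illustration.

```python
# Speed of light in vacuum, in meters per second (exact SI value).
SPEED_OF_LIGHT = 299_792_458.0


def range_from_round_trip(round_trip_seconds: float) -> float:
    """Return target distance in meters for a pulsed time-of-flight echo.

    The pulse travels out to the object and back, so the one-way
    distance is half of (speed of light x round-trip time).
    """
    if round_trip_seconds < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT * round_trip_seconds / 2.0


def round_trip_for_range(distance_m: float) -> float:
    """Inverse: round-trip time in seconds for an object distance_m away."""
    return 2.0 * distance_m / SPEED_OF_LIGHT


if __name__ == "__main__":
    # Sample distances, printed as echo delays in nanoseconds.
    for d in (2.0, 10.0, 60.0):
        print(f"{d:5.1f} m -> {round_trip_for_range(d) * 1e9:6.2f} ns round trip")
```

The delays are tiny: an object 10 m away returns its echo after roughly 67 ns, which is why ranging electronics need very fine timing resolution.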

Both methods can be used to locate an object in space. By harnessing the Doppler shift, they can also calculate the object’s speed and whether it is moving toward or away from the sensor. Police have used lidar speed guns for that very purpose since 1989.
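A minimal sketch of the Doppler calculation, assuming a target moving directly toward or away from the sensor at a speed far below the speed of light. For a reflected signal the frequency shift is roughly twice the target’s radial speed divided by the wavelength, so the speed can be recovered from the measured shift. Only the component of motion along the beam is captured. The 1550 nm laser and 24 GHz radar wavelengths are illustrative assumptions, not figures from the article.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def radial_speed_from_doppler(shift_hz: float, wavelength_m: float) -> float:
    """Radial speed (m/s) of a reflecting target from its two-way Doppler shift.

    Uses the low-speed approximation f_d = 2 * v / wavelength. A positive
    shift means the target is approaching; negative means receding.
    Only the component of motion along the beam is measured.
    """
    return shift_hz * wavelength_m / 2.0


def doppler_shift(speed_mps: float, wavelength_m: float) -> float:
    """Expected two-way Doppler shift (Hz) for a target closing at speed_mps."""
    return 2.0 * speed_mps / wavelength_m


if __name__ == "__main__":
    car_speed = 30.0                          # m/s, about 108 km/h (illustrative)
    lidar_wavelength = 1550e-9                # assumed near-infrared laser
    radar_wavelength = SPEED_OF_LIGHT / 24e9  # assumed 24 GHz radar, ~1.25 cm

    for name, wl in (("lidar", lidar_wavelength), ("radar", radar_wavelength)):
        f_d = doppler_shift(car_speed, wl)
        print(f"{name}: shift = {f_d:,.0f} Hz, recovered speed = "
              f"{radial_speed_from_doppler(f_d, wl):.1f} m/s")
```

The same speed produces a far larger frequency shift at optical wavelengths (tens of megahertz) than at radar wavelengths (a few kilohertz).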

“Radio waves are created by the acceleration of electrons in a radio antenna, and light waves are created by the oscillations of the electrons within atoms,” writes Frank Wolfs on the differences between the two. Furthermore, because light waves have far shorter wavelengths, approximately 100,000 times shorter, they can describe an environment or object in greater detail.
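The link between wavelength and detail can be sketched with two standard formulas: wavelength equals the speed of light divided by frequency, and the finest angle an aperture can resolve is roughly 1.22 times the wavelength divided by the aperture diameter (the Rayleigh criterion, a textbook diffraction limit the article does not mention). The 77 GHz radar, 905 nm laser and shared 10 cm aperture below are illustrative assumptions. For these particular values the wavelength ratio is in the thousands rather than 100,000, because the exact ratio depends on which radio and light frequencies are compared.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def wavelength(frequency_hz: float) -> float:
    """Wavelength in meters of an electromagnetic wave of the given frequency."""
    return SPEED_OF_LIGHT / frequency_hz


def rayleigh_limit_rad(wavelength_m: float, aperture_m: float) -> float:
    """Smallest resolvable angle (radians) for a circular aperture,
    using the Rayleigh criterion theta ~ 1.22 * wavelength / diameter."""
    return 1.22 * wavelength_m / aperture_m


if __name__ == "__main__":
    aperture = 0.10                  # assumed 10 cm aperture for both sensors
    radar_wl = wavelength(77e9)      # assumed 77 GHz automotive radar (~3.9 mm)
    lidar_wl = 905e-9                # assumed 905 nm near-infrared laser

    print(f"wavelength ratio: {radar_wl / lidar_wl:,.0f}x")
    for name, wl in (("radar", radar_wl), ("lidar", lidar_wl)):
        theta = rayleigh_limit_rad(wl, aperture)
        print(f"{name}: {math.degrees(theta):.5f} deg resolvable angle")
```

With these assumptions the radar’s resolvable angle comes out at roughly 2.7 degrees, while the lidar’s is well under a thousandth of a degree.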

For this reason, lidar is generally regarded as more precise, although the technology can be hampered by adverse weather such as rain or fog.

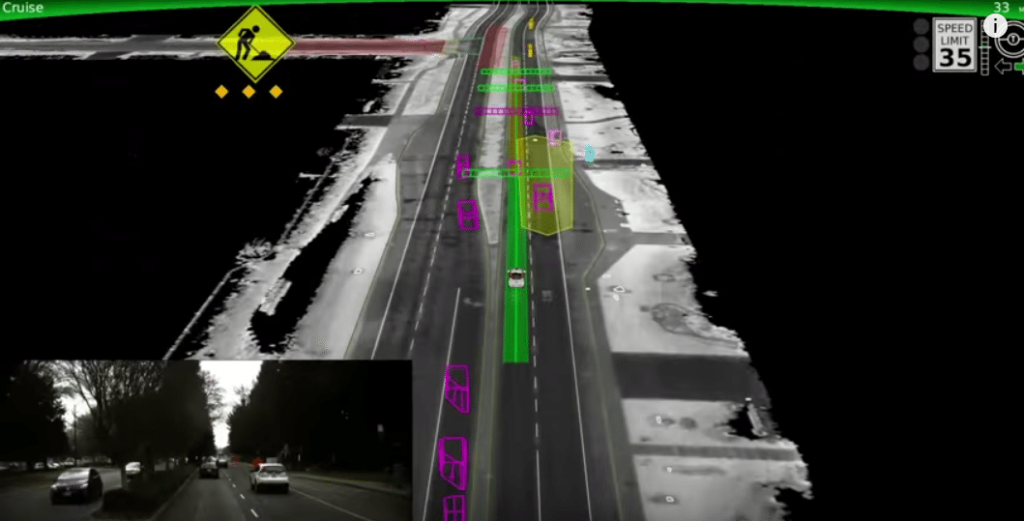

Google’s self-driving cars depend on lidar to understand their surroundings. The lidar unit is mounted in a distinctive beacon on the car’s roof and scans 60 meters in all directions. According to The Guardian, the equipment Google uses can cost up to $70,000 per unit.

This high cost may partly explain why rival self-driving car company Tesla remains committed to radar. Tesla CEO Elon Musk has repeatedly expressed a preference for a system that combines radar, cameras, ultrasound and other sensors, saying lidar “doesn’t make sense” for a self-driving car.

As recently as July, Musk tweeted about the issue: “Working on using existing Tesla radar by itself (decoupled from camera) w temporal smoothing to create a coarse point cloud like lidar.” The tweet came several weeks after the sad news that a Tesla Model S owner had been killed while his car was operating in Autopilot mode.

Lidar was also used to collect the data for the visuals in a Radiohead music video.

Current lidar units are mechanical systems with multiple components, including lenses and devices that spin the transmitter and receiver. This adds complexity, since every extra part is a potential point of failure. It also makes the units bulky and limits the scan rate, slowing down the detection of objects.

The MIT device is a “0.5 mm x 6 mm silicon photonic chip with steerable transmitting and receiving phased arrays and on-chip germanium photodetectors.” The laser is not part of the device, but research has shown that future integration is feasible.

The lidar-on-a-chip steers its beam by controlling the phase of the light fed to each antenna in an integrated array. It does this by heating the waveguides: as silicon heats up, its refractive index, which determines how fast light travels through the material, changes, and so does the phase of the light passing through.
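The heating step can be made concrete with the thermo-optic relation: the index change is the thermo-optic coefficient times the temperature rise, and the resulting phase shift is 2π times that index change times the heated length, divided by the wavelength. This is a minimal sketch, assuming a silicon thermo-optic coefficient of about 1.8 × 10⁻⁴ per kelvin, a 1550 nm operating wavelength and a hypothetical 100 µm heated section; these are typical textbook values, not figures from the MIT work.

```python
import math

# Assumed values for illustration; the article does not give these numbers.
DN_DT_SILICON = 1.8e-4   # thermo-optic coefficient of silicon, per kelvin (approx.)
WAVELENGTH = 1550e-9     # operating wavelength in meters (assumed)


def phase_shift_rad(delta_t_k: float, heated_length_m: float) -> float:
    """Extra optical phase (radians) picked up in a heated waveguide section.

    delta_n = dn/dT * delta_T, and phase = 2*pi * delta_n * L / wavelength.
    """
    delta_n = DN_DT_SILICON * delta_t_k
    return 2.0 * math.pi * delta_n * heated_length_m / WAVELENGTH


def temperature_for_phase(phase_rad: float, heated_length_m: float) -> float:
    """Temperature rise (K) needed to reach a target phase shift."""
    return phase_rad * WAVELENGTH / (2.0 * math.pi * DN_DT_SILICON * heated_length_m)


if __name__ == "__main__":
    length = 100e-6  # hypothetical 100 micrometer heater
    dt_pi = temperature_for_phase(math.pi, length)
    print(f"Heating needed for a pi phase shift: {dt_pi:.1f} K")
    print(f"Check: {phase_shift_rad(dt_pi, length) / math.pi:.3f} pi")
```

Under these assumptions a 100 µm heater needs a rise of roughly 43 K for a half-wave (π) shift; a longer heater needs proportionally less.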

As the light moves through the chip, it passes notches designed to act as antennas that scatter the beam. Each antenna has a distinct “emission pattern, and where all of the emission patterns constructively interfere, a focused beam is created without a need for lenses.”
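How phase control steers that focused beam can be sketched with the textbook array factor of a uniform one-dimensional phased array, rather than the MIT chip’s actual design. N identical emitters spaced d apart each receive a phase offset that grows linearly along the array, and the far-field intensity peaks where their contributions add in phase. The emitter count, spacing and wavelength are hypothetical, and each antenna’s own emission pattern is ignored.

```python
import cmath
import math


def array_factor(theta_rad, n_emitters, spacing_m, wavelength_m, phase_step_rad):
    """Normalized far-field intensity of a uniform linear phased array.

    Emitter n carries phase n * phase_step_rad; light leaving at angle theta
    from broadside gains an extra path phase n * k * d * sin(theta).
    Intensity is 1.0 where all emitters interfere constructively.
    """
    k = 2.0 * math.pi / wavelength_m
    total = sum(
        cmath.exp(1j * n * (k * spacing_m * math.sin(theta_rad) + phase_step_rad))
        for n in range(n_emitters)
    )
    return abs(total) ** 2 / n_emitters ** 2


def phase_step_for_angle(target_rad, spacing_m, wavelength_m):
    """Per-emitter phase increment that points the main beam at target_rad."""
    k = 2.0 * math.pi / wavelength_m
    return -k * spacing_m * math.sin(target_rad)


def peak_angle_deg(n_emitters, spacing_m, wavelength_m, phase_step_rad, steps=3601):
    """Brute-force search for the brightest direction between -90 and +90 degrees."""
    best_deg, best_val = 0.0, -1.0
    for i in range(steps):
        deg = -90.0 + 180.0 * i / (steps - 1)
        val = array_factor(math.radians(deg), n_emitters, spacing_m,
                           wavelength_m, phase_step_rad)
        if val > best_val:
            best_deg, best_val = deg, val
    return best_deg


if __name__ == "__main__":
    wavelength = 1550e-9      # assumed operating wavelength
    spacing = wavelength / 2  # half-wavelength pitch avoids extra grating lobes
    n = 16                    # hypothetical number of antennas
    for target in (-20.0, 0.0, 15.0):
        step = phase_step_for_angle(math.radians(target), spacing, wavelength)
        print(f"target {target:+.0f} deg -> peak at "
              f"{peak_angle_deg(n, spacing, wavelength, step):+.1f} deg")
```

Changing only the per-emitter phase step moves the brightest direction, and the reported peak matches each requested angle to within the 0.05° search step. No part of the array moves, which is the property that lets a phased array replace spinning optics.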

The researchers say the chip is “1,000 times faster than what is currently achieved in mechanical lidar systems.” For a fast autonomous device such as a drone, this could be very useful, as it allows small, fast-moving objects to be accurately tracked and avoided.

At present, the device has a range of 2 meters, and the researchers plan to increase this to 10 meters by the end of the year. Current lidar systems cost from $1,000 to $70,000, but because MIT’s lidar chips are made with the fabrication techniques used in existing semiconductor factories, the researchers believe a production price of $10 is achievable.

As CTO of AIM Photonics, Professor Watts will likely play a part in bringing this technology to market, and commercial lidar-on-a-chip is expected to arrive “in a few years.”

The researchers believe the high-resolution imaging possible with lidar-on-a-chip means applications could include sensors on a robot’s fingers. It is also easy to envision affordable lidar serving in the automated factories of the future, for example by providing high-quality data in an Industry 4.0 smart factory.

For those with less industrial ambitions, mass production of the chips and the resulting low price could put lidar within reach of Maker projects.