Researchers from Lehigh University, Pennsylvania, have developed a novel machine learning-based approach for grouping materials according to their structural similarities.

In what the team believes to be the first study of its kind, an artificial neural network was used to identify structural similarities and trends in a database of more than 25,000 microscopy images of materials. The technique can be used to uncover previously unseen links between newly developed materials and even to correlate structure with properties, potentially giving rise to a new method of computational materials development for sectors such as 3D printing.

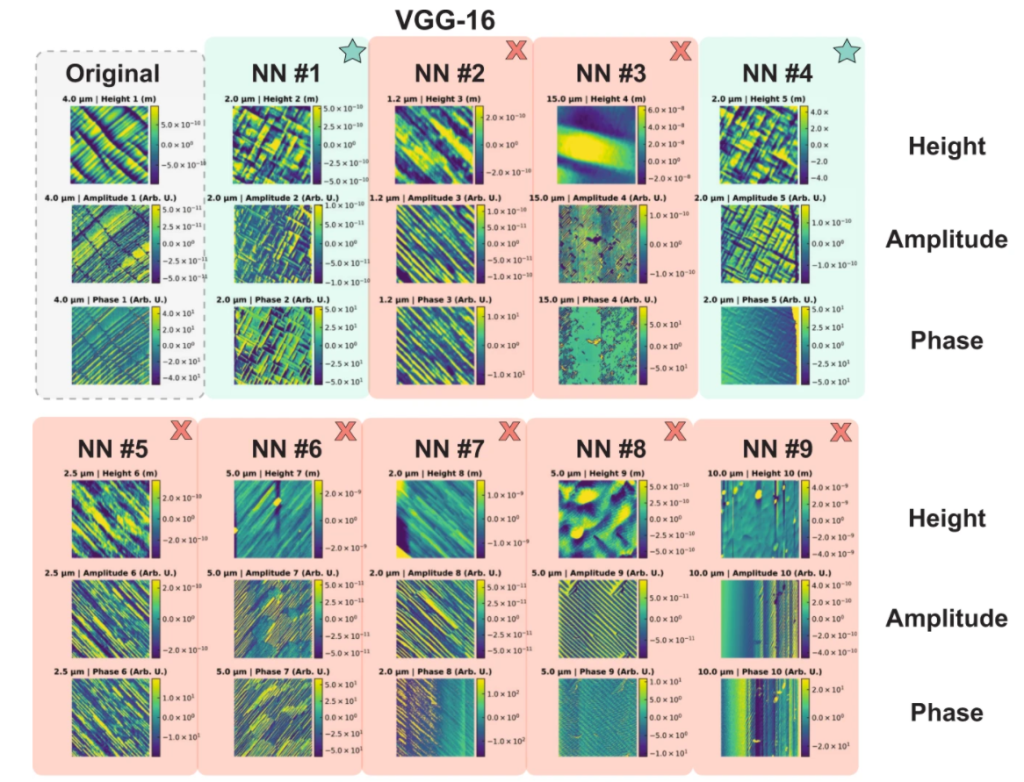

Joshua Agar, a lead author of the study, describes how the model’s ability to detect structural symmetry was a cornerstone of the project’s success. He said, “One of the novelties of our work is that we built a special neural network to understand symmetry and we use that as a feature extractor to make it much better at understanding images.”

The relationship between structure and properties

In materials research, understanding how the structure of a material affects its properties is a key goal. However, because structure is so complex, there are currently no widely used metrics for reliably determining how a material’s structure will affect its properties. With the rise of machine learning, artificial neural networks have emerged as a promising tool for this task, but Agar believes two major challenges remain.

The first is that the vast majority of data produced by materials research experiments is never analyzed by machine learning models. This is because the results, often in the form of microscopy images, are rarely stored in a structured, usable manner. Results also tend not to be shared between laboratories, and there is no centralized database that can easily be accessed. This is a problem across materials research, but even more so in additive manufacturing, which is a smaller, more specialized field.

The second issue is that neural networks are not very effective at learning to identify structural symmetry and periodicity (the degree to which a pattern in a material’s structure repeats at regular intervals). Since these two features are crucial for materials researchers, this has made neural networks difficult to apply until now.
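To make “symmetry” and “periodicity” a little more concrete, here is a minimal hand-crafted sketch in Python with NumPy. It is not the Lehigh neural network, which learns its symmetry-aware features; the function names `symmetry_scores` and `periodicity_score` are hypothetical, and the sketch assumes square grayscale images stored as 2D arrays. Symmetry is scored by correlating an image with rotated and mirrored copies of itself, and periodicity by how much of the image’s Fourier power sits in its strongest peaks.

```python
import numpy as np


def symmetry_scores(img: np.ndarray) -> dict:
    """Correlate a square image with rotated and mirrored copies of itself.

    A score near 1.0 means the image is (nearly) unchanged by that operation.
    Hypothetical hand-crafted illustration, not the Lehigh network.
    """
    if img.shape[0] != img.shape[1]:
        raise ValueError("expects a square image")
    # Standardize once; transformed copies contain the same pixel values,
    # so the mean of the elementwise product approximates a Pearson correlation.
    x = (img - img.mean()) / (img.std() + 1e-12)
    return {
        "rot90": float(np.mean(x * np.rot90(x, 1))),
        "rot180": float(np.mean(x * np.rot90(x, 2))),
        "mirror_lr": float(np.mean(x * np.fliplr(x))),
        "mirror_ud": float(np.mean(x * np.flipud(x))),
    }


def periodicity_score(img: np.ndarray) -> float:
    """Fraction of spectral power in the two strongest Fourier components.

    Strongly periodic images concentrate power in a few peaks (close to 1.0);
    disordered images spread it out (close to 0.0).
    """
    x = img - img.mean()                      # remove the constant (DC) term
    power = np.abs(np.fft.fft2(x)) ** 2
    total = power.sum()
    if total == 0:                            # a flat image has no pattern at all
        return 0.0
    # A real-valued image's spectrum is symmetric, so peaks come in +/- pairs.
    top_two = np.sort(power, axis=None)[-2:]
    return float(top_two.sum() / total)
```

A striped image such as `np.cos(2 * np.pi * 4 * xx / 32)` on a 32×32 grid scores close to 1.0 for periodicity, while random noise scores close to 0. Fixed descriptors like these only capture the specific operations they test for, which is one reason a network that learns symmetry-aware features is attractive.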

Similarity projections via machine learning

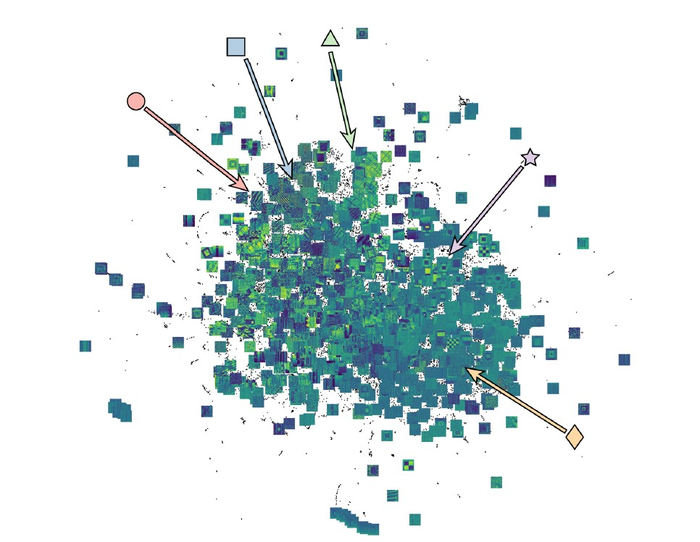

Lehigh’s novel neural network is designed to address both of the issues described by Agar. As well as understanding symmetry, the model can search unstructured image databases to identify trends and project similarities between images. To make these similarities visible, it uses a non-linear dimensionality reduction technique called Uniform Manifold Approximation and Projection (UMAP).
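Searching a database for similar images can be sketched as a nearest-neighbour lookup over feature vectors. The snippet below assumes each image has already been converted into a fixed-length feature vector by a network, and it uses cosine similarity as the distance measure; both the measure and the function name `most_similar` are assumptions for illustration, not details taken from the study.

```python
import numpy as np


def most_similar(features: np.ndarray, query_idx: int, k: int = 5) -> np.ndarray:
    """Indices of the k images whose feature vectors are closest to the query.

    features: array of shape (n_images, n_features), one row per image.
    Hypothetical helper using cosine similarity.
    """
    # Normalize each row so the dot product equals the cosine of the angle.
    unit = features / np.linalg.norm(features, axis=1, keepdims=True)
    sims = unit @ unit[query_idx]        # cosine similarity to every image
    sims[query_idx] = -np.inf            # never return the query itself
    return np.argsort(sims)[::-1][:k]    # highest similarity first
```

Cosine similarity ignores the overall magnitude of a feature vector and compares only its direction, which is a common choice for comparing learned embeddings.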

Agar explains that the approach made the higher-level structure of the data more digestible for the team: “If you train a neural network, the result is a vector, or a set of numbers that is a compact descriptor of the features. Those features help classify things so that some similarity is learned. What’s produced is still rather large in space, though, because you might have 512 or more different features. So, you want to compress it into a space that a human can comprehend such as 2D or 3D.”
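The compression Agar describes, from hundreds of features down to two dimensions, can be sketched as below. This swaps in principal component analysis (PCA), a simpler *linear* method, purely so that the example runs with NumPy alone; the study itself uses the non-linear UMAP, available in the `umap-learn` package as `umap.UMAP(n_components=2).fit_transform(features)`. The function name `project_2d` and the 512-feature input are illustrative assumptions.

```python
import numpy as np


def project_2d(features: np.ndarray) -> np.ndarray:
    """Project (n_images, n_features) onto the top two principal axes (PCA).

    Linear stand-in for UMAP, used here only for illustration.
    """
    centered = features - features.mean(axis=0)
    # Rows of vt are principal directions, ordered by explained variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:2].T           # shape (n_images, 2)


# Example: 100 images described by 512 features each.
rng = np.random.default_rng(0)
coords = project_2d(rng.standard_normal((100, 512)))
print(coords.shape)  # (100, 2)
```

PCA keeps only the directions of greatest variance and can blur curved or clustered structure; UMAP instead tries to preserve each point’s local neighbourhood, which is why it tends to produce clearer groupings on plots like the featured image.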

The Lehigh team trained the model to include symmetry-aware features and applied it to an unstructured set of 25,133 piezoresponse force microscopy images collected over five years at UC Berkeley. In doing so, they were able to group similar materials together based on structure, paving the way to a better understanding of structure-property relationships.
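Once images are reduced to low-dimensional coordinates, one simple way to turn “similar” into explicit groups is clustering. The sketch below runs k-means (Lloyd’s algorithm with farthest-point initialization) on 2D points; it is an assumed, generic technique chosen for illustration and is not the grouping procedure described in the paper. The function names and the choice of `k` are hypothetical.

```python
import numpy as np


def _assign(points: np.ndarray, centers: np.ndarray) -> np.ndarray:
    # Distance from every point to every center, shape (n_points, k).
    d = np.linalg.norm(points[:, None, :] - centers[None, :, :], axis=2)
    return d.argmin(axis=1)


def kmeans(points: np.ndarray, k: int, n_iter: int = 100, seed: int = 0):
    """Lloyd's k-means with farthest-point initialization (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    centers = [points[rng.integers(len(points))]]
    while len(centers) < k:
        # Next center: the point farthest from all centers chosen so far.
        dist = np.min([np.linalg.norm(points - c, axis=1) for c in centers], axis=0)
        centers.append(points[dist.argmax()])
    centers = np.array(centers, dtype=float)

    for _ in range(n_iter):
        labels = _assign(points, centers)
        # Move each center to the mean of its points; keep it if it has none.
        new_centers = np.array([
            points[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return _assign(points, centers), centers
```

k-means requires choosing the number of groups in advance and favours roughly round clusters, so in practice it is only a starting point for exploring a projection like the one the Lehigh team produced.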

Ultimately, the work showcases how neural networks, combined with better data management, could expedite materials development studies for both additive manufacturing and the wider materials community.

Further details of the study can be found in the paper titled ‘Symmetry-aware recursive image similarity exploration for materials microscopy’.

The predictive power of machine learning is increasingly being applied across many aspects of additive manufacturing. Researchers from Argonne National Laboratory and Texas A&M University previously developed an innovative approach to defect detection in 3D printed parts. Using real-time temperature data together with machine learning algorithms, the scientists were able to correlate a part’s thermal history with the formation of subsurface defects.

Elsewhere, in the commercial space, engineering firm Renishaw partnered with 3D printing robotics specialist Additive Automations to develop deep learning-based post-processing technology for metal 3D printed parts. The partnership involves using collaborative robots (cobots), together with deep learning algorithms, to automatically detect and remove support structures in their entirety.

Subscribe to the 3D Printing Industry newsletter for the latest news in additive manufacturing. You can also stay connected by following us on Twitter, liking us on Facebook, and tuning into the 3D Printing Industry YouTube Channel.

Looking for a career in additive manufacturing? Visit 3D Printing Jobs for a selection of roles in the industry.

Featured image shows a comparison of UMAP projections using natural-image and symmetry-aware features. Image via Lehigh University.