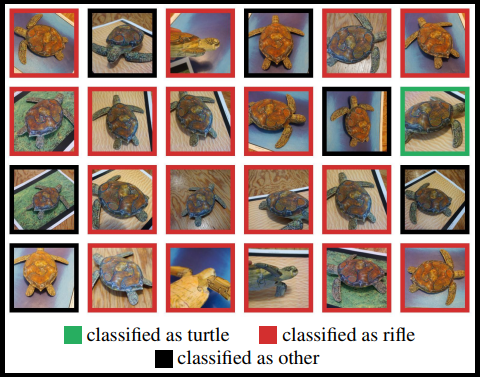

Researchers from the Massachusetts Institute of Technology (MIT) and the University of California, Berkeley (UC Berkeley) have used 3D printed objects, including a turtle, to test an artificial intelligence (AI) image recognition algorithm.

Although the 3D printed model is clearly a turtle to the human eye, the AI algorithm classified it as a rifle, demonstrating security flaws in advanced machine learning technologies.

Aleksander Madry, a computer scientist at MIT, is concerned about the security implications for high-tech electronics. “We need to rethink all of our machine learning pipeline to make it more robust,” he comments.

An adversarial attack

Last week, at the International Conference on Machine Learning (ICML) in Stockholm, the MIT/UC Berkeley team presented their study titled “Synthesizing Robust Adversarial Examples” in a panel on adversarial attacks.

Adversarial attacks use subtly modified images, objects, or sound inputs that are intentionally designed to fool AI models. Much like hacking, this process exposes flaws in machine learning systems, which in turn helps researchers find new ways to defend and improve them.
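
To make the idea concrete, here is a minimal sketch of one well-known way to craft an adversarial input, the fast gradient sign method, applied to a hypothetical three-feature logistic-regression classifier. The weights, the input, and the `epsilon` value are illustrative assumptions; this is not the model or the method used in the MIT/UC Berkeley study.

```python
import math

# Hypothetical toy classifier (NOT a model from the study):
# logistic regression over three input features.
WEIGHTS = [2.0, -1.0, 0.5]
BIAS = 0.1


def predict_prob(x):
    """Probability the toy model assigns to class 1."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def sign(v):
    """Return +1, -1, or 0 according to the sign of v."""
    return (v > 0) - (v < 0)


def fgsm(x, true_label, epsilon):
    """Fast gradient sign method: shift every feature by epsilon in the
    direction that increases the cross-entropy loss for true_label."""
    p = predict_prob(x)
    # For logistic regression, d(loss)/d(x_i) = (p - y) * w_i.
    grad = [(p - true_label) * w for w in WEIGHTS]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


x = [0.5, 0.2, 0.4]
x_adv = fgsm(x, true_label=1, epsilon=0.4)
print(round(predict_prob(x), 2), round(predict_prob(x_adv), 2))
```

With `epsilon=0.4`, no feature moves by more than 0.4, yet the toy model's probability for class 1 falls from about 0.75 to about 0.43, flipping its decision.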

Under the umbrella of Industry 4.0, a recent review led by Siemens UK encouraged companies to embrace AI, robotics, and autonomous systems, citing their estimated £455 billion contribution to the UK’s economy. With so much emphasis on these technologies, experiments are needed to evaluate and improve their safety.

“[The attacks are] a great lens through which we can understand what we know about machine learning,” stated Dawn Song, a computer scientist at UC Berkeley.

Spoofing the system

The researchers began their adversarial attacks by placing stickers on stop signs. The stickers confused an AI image recognition algorithm into classifying a standard stop sign as a 45 mile-per-hour speed limit sign, a potential disaster for autonomous cars relying on this technology.

Next, the team tested a 3D printed turtle model. The AI algorithm captured images of the model, and the researchers gradually altered the model’s texture, guided by the algorithm’s gradients and accounting for changes in the model’s position, until the output was incorrect. As a result, the algorithm wrongly classified the 3D printed turtle as a rifle.
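
A perturbation tuned for one fixed image often stops working once the object is rotated or relit. The study’s title refers to robust adversarial examples, built with a technique called Expectation Over Transformation (EOT), which optimizes the perturbation averaged over many random transformations. The sketch below illustrates that idea on a hypothetical toy classifier, where a random scale and shift are assumed stand-ins for changes in viewpoint and lighting; all names, weights, and step sizes are illustrative assumptions rather than the researchers’ code.

```python
import math
import random

# Hypothetical toy classifier (NOT the network attacked in the study):
# logistic regression on a 3-feature "image". Class 1 stands in for
# "turtle" and class 0 for "rifle".
WEIGHTS = [2.0, -1.0, 0.5]
BIAS = 0.1


def predict_prob(x):
    """Probability the toy model assigns to class 1 ("turtle")."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def random_transform(rng):
    """Assumed stand-in for viewpoint/lighting changes: x -> a * x + b."""
    return rng.uniform(0.8, 1.2), rng.uniform(-0.05, 0.05)


def eot_attack(x, target, epsilon, steps=100, lr=0.1, samples=32, seed=0):
    """Expectation Over Transformation: descend the target-class loss averaged
    over random transformations, keeping every feature within +/- epsilon
    of the original input."""
    rng = random.Random(seed)
    x_adv = list(x)
    for _ in range(steps):
        grad = [0.0] * len(x)
        for _ in range(samples):
            a, b = random_transform(rng)
            p = predict_prob([a * xi + b for xi in x_adv])
            # Gradient of cross-entropy toward `target` w.r.t. x_i: a * (p - target) * w_i
            for i, w in enumerate(WEIGHTS):
                grad[i] += a * (p - target) * w / samples
        # Step toward the target class, then clip to the epsilon box.
        x_adv = [
            min(max(xi - lr * g, x0 - epsilon), x0 + epsilon)
            for xi, g, x0 in zip(x_adv, grad, x)
        ]
    return x_adv


x = [0.5, 0.2, 0.4]
x_adv = eot_attack(x, target=0, epsilon=0.4)
```

Averaging the gradient over many sampled transformations is what lets the perturbation keep fooling the toy model across the whole range of scales and shifts, rather than for a single fixed input.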

Using the same process, the research team was able to trick the AI algorithm into recognizing a 3D printed baseball as an espresso.

“We in the field of machine learning just aren’t used to thinking about this from the security mindset,” said Anish Athalye, a computer scientist at MIT who co-led the study.

AI developers associated with this study are now working on strengthening algorithm defenses. One approach is image compression, which makes an algorithm’s otherwise smooth gradients jagged and therefore harder for adversarial attacks to exploit.
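
The compression idea can be sketched with bit-depth reduction, a simple input-quantization step swapped in here as a stand-in for real image compression; `quantize` and `levels` are hypothetical names. Because the quantized output is piecewise constant, small perturbations are snapped away, and the gradient an attacker would follow is zero almost everywhere.

```python
def quantize(pixels, levels=8):
    """Hypothetical preprocessing defense (bit-depth reduction): snap each
    pixel value to the nearest of `levels` evenly spaced values in [0, 1].
    The output is piecewise constant, so its gradient with respect to the
    input is zero almost everywhere."""
    step = 1.0 / (levels - 1)
    return [round(min(max(p, 0.0), 1.0) / step) * step for p in pixels]


clean = [k / 7 for k in (0, 3, 7)]  # values that lie on the 8-level grid
noise = [0.03, -0.03, -0.03]        # smaller than half a step (1/14)
perturbed = [min(max(p + d, 0.0), 1.0) for p, d in zip(clean, noise)]

# The small perturbation is snapped away before the model sees the input.
print(quantize(clean) == quantize(perturbed))
```

This only illustrates the jagged-gradient effect; quantization on its own is not a guarantee of robustness against a determined attacker.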

Featured image shows the 3D printed turtle model used to fool an AI algorithm. Photo via Anish Athalye.